Turbonomic Extends Kubernetes Management Reach

Turbonomic this week extended the reach of its workload automation platform for Kubernetes into the public cloud.

Asena Hertz, senior product marketing manager for Turbonomic, says the company has added support for Amazon Elastic Container Service for Kubernetes (EKS), Microsoft Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE). With that support, Turbonomic aims to ensure that any container-as-a-service (CaaS) environment has the resources required both to meet specific application performance levels and to comply with any number of regulations.

In addition to support for public clouds, the fall edition of the company’s namesake platform also adds support for Pivotal Container Service (PKS) alongside existing support for other on-premises editions of Kubernetes.

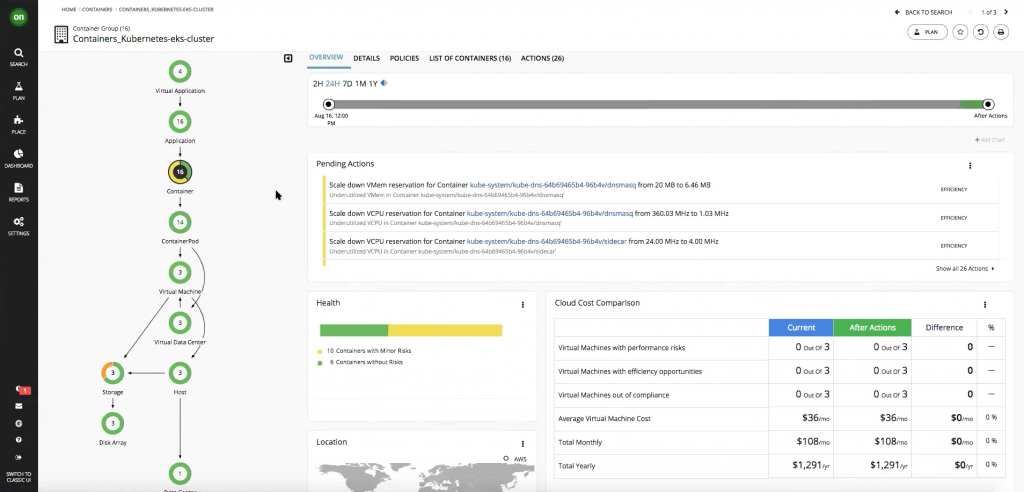

Hertz says the goal is to enable IT organizations to create self-service instances of Kubernetes that leverage a Turbonomic decision engine based on machine learning algorithms to make sure Kubernetes pods have the exact resources needed at any given point in time.

Those capabilities include automated pod rescheduling to optimize placement, applying container platform compliance policies, optimizing container resource reservations, limiting the number of clusters that can run at once and scaling Kubernetes clusters in and out based on real-time demand. They extend the reach of a Turbonomic platform that already provides similar capabilities for virtual machines, Hertz says.

Hertz notes that the rise of microservices based on containers has made IT environments a lot more dynamic. Containers tend to be ephemeral in that they are replaced often, and each microservice based on those containers is often trying to access the same limited amount of underlying compute and storage resources. Addressing that challenge will require greater reliance on automation made possible by machine learning algorithms that inject artificial intelligence (AI) into IT operations, she says. It is even arguable that the deployment of containerized applications will force IT operations teams to embrace AI technologies more rapidly than they otherwise would. In fact, DevOps teams, which tend to be judged more on the rate at which applications are released and updated than on infrastructure availability, most likely will be at the forefront of AI adoption.

Thus far, Turbonomic reports that a little more than 10 percent of its existing customer base has deployed containers in a production environment, while another 11 percent are using containers for application lifecycle management. A further 30 percent say they are actively exploring how to employ containers.

In addition, Hertz notes that as instances of Kubernetes become more distributed across hybrid cloud computing environments, the challenges associated with managing DevOps processes across those platforms will require increased reliance on IT automation, because the size of the IT operations team is not likely to increase inside most organizations.

Of course, increased reliance on AI to manage CaaS environments will require adjustments to the IT operations culture. Many IT professionals tend not to trust tools and platforms that automate processes in ways they can’t easily visualize. But as the mystique surrounding AI technologies continues to fade, it’s only a matter of time before AI capabilities within IT management tools become a general expectation.