Kubernetes-as-a-Service: One Step Closer, but Not the End Game

Understanding what Kubernetes-as-a-service can—and can’t—do

Four years ago, Docker democratized the ability for developers to reliably run their applications anywhere. What worked on their computer worked in test/dev and production. Portable and lightweight, containers allowed us to finally realize the benefits of microservice architectures. Hello, developer speed. Then, as developers adopted these architectures, the need for a solution that could orchestrate containerized application components arose. Hello, operator challenge.

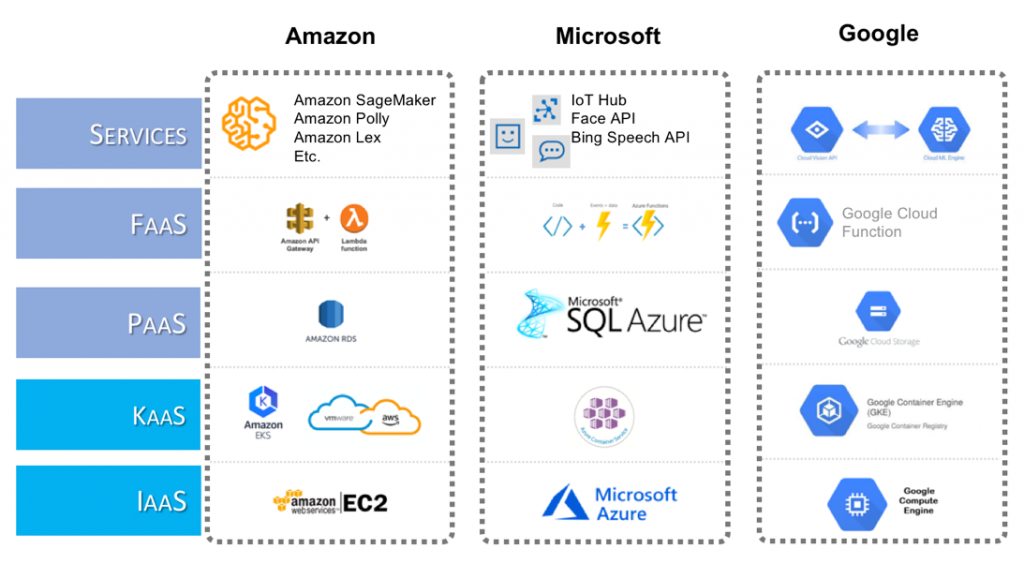

Enter Kubernetes, the elegant container platform that is easy to get started with and offers all the promise of portability and elasticity at scale. Which brings us to today, where every major cloud provider has a Kubernetes-as-a-service offering to enable faster, easier adoption of the Kubernetes platform. But what’s the end game? In an industry rife with shiny objects, it’s all too easy to get lost in the whats and forget the why.

The End Game, and How Do We Get There?

As Kelsey Hightower of Google Cloud Platform put it: “Here is my application, run it for me, when and where I want it, securely. That’s the end game.” Developers want to focus on building the best applications and services for the business. If they’re thinking about how well, how securely or where those apps are running, they can’t do that. But here’s the other side of that story: Operators can’t reliably do this given today’s level of complexity and scale. Abstracting away the infrastructure doesn’t mean it ceases to exist—it’s just somebody else’s problem. If it’s your problem, you have a critical role in getting to this end game.

So, how do you help your organization get to the end game? Don’t just get software to do what you tell it; get software to make decisions. You define the goal—application SLO and business requirements. The system takes care of the rest.

What Happens When You Don’t Automate Decision-Making?

Exactly what’s happening now. Resource decisions are time-consuming, risky and, frankly, avoidable. You overprovision nodes and clusters because performance is paramount. But that’s expensive and won’t fly at scale. If you’re in the cloud, paying these overprovisioning costs by the minute, it really hurts. You also configure containers and nodes, defining your autoscaling policies and setting thresholds to manage your clusters. It’s time-consuming and, again, doesn’t scale. Approaches that rely on operators to make decisions and anticipate every scenario are risky. People make mistakes. Finally, the time operators spend configuring and managing the environment could otherwise have been spent on more impactful work, improving internal processes or launching new ones. Imagine what your team could accomplish if these resource decisions were continuously and automatically made for you.

Doesn’t Kubernetes-as-a-Service Do All This?

No, Kubernetes-as-a-service (KaaS) does not mean “full-service.” KaaS offerings are about getting customers onboarded onto the public cloud or Kubernetes distribution more quickly. From there, customers will use (and pay for) the tangential services the provider offers. Below is a basic KaaS architecture:

Doesn’t Autoscaling Solve All My Management Challenges?

No. Remember, it’s not about getting software to do what you tell it, but about getting software to make decisions. The Kubernetes platform is no different from traditional infrastructure in that people still make the resource decisions. Operators use thresholds to define autoscaling policies. Autoscaling automates processes: scale a pod out when you hit 80 percent CPU utilization (or maybe it’s memory); don’t allocate more than this much CPU (or memory) to this task; and the containers for service “a” will have “x” CPU and “y” memory, while the containers for service “b” will have different allocations.
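The threshold rule described above can be sketched in a few lines. This is a minimal illustration rather than any provider's implementation: the function name `desired_replicas` and all of its parameters are hypothetical, and the proportional formula follows the one documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × current metric / target metric)), with its tolerance handling simplified.

```python
import math


def desired_replicas(current_replicas, current_cpu_pct, target_cpu_pct=80.0,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Threshold-style scaling rule (hypothetical helper).

    Every default in the signature is a number a person had to choose.
    """
    ratio = current_cpu_pct / target_cpu_pct
    # Inside the tolerance band around the target, leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Scale proportionally to how far utilization is from the target.
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the operator-defined bounds.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, 120.0))  # 6
```

Note what the rule does not know: whether the application is actually memory-bound, whether the node has room for the extra pods, or what the scale-out costs. Those decisions stay with whoever picked the numbers.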

At best, just automating processes is time-consuming. At worst, it’s risky, because people make mistakes. And the reality is that thresholds are an either/or approach that cannot resolve trade-offs. Additionally, these policies have no awareness of the existing interdependencies in the environment. What happens if demand increases and two applications on the same node scale out at the same time? Have you defined a policy for which pods need to be rescheduled or where newly deployed pods should be placed? Whether it’s a trade-off between CPU and memory (is my application more compute- or memory-intensive?) or the broader trade-offs between performance, compliance and cost, neither Kubernetes nor KaaS solves this problem.
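The scenario of two applications scaling out on the same node can be made concrete with a toy model. This is a sketch under stated assumptions, not how the Kubernetes scheduler works: `ScaleOut` and `place_on_node` are hypothetical names, only CPU requests are considered, and there is a single node with first-come placement. A real cluster would try other nodes or add one.

```python
from dataclasses import dataclass


@dataclass
class ScaleOut:
    service: str
    new_pods: int
    cpu_request_millicores: int  # CPU request per pod


def place_on_node(free_millicores, scale_outs):
    """Greedy first-come placement on one node (hypothetical helper).

    Returns two dicts keyed by service: pods placed and pods left pending.
    """
    placed, pending = {}, {}
    for s in scale_outs:
        # How many of this service's new pods still fit in the remaining CPU?
        fits = min(s.new_pods, free_millicores // s.cpu_request_millicores)
        placed[s.service] = fits
        pending[s.service] = s.new_pods - fits
        free_millicores -= fits * s.cpu_request_millicores
    return placed, pending


# Two services hit their thresholds at once on a node with 2000m CPU free.
placed, pending = place_on_node(
    2000, [ScaleOut("a", 3, 500), ScaleOut("b", 2, 500)]
)
print(placed)   # {'a': 3, 'b': 1}
print(pending)  # {'a': 0, 'b': 1}
```

Each policy was correct in isolation, yet service “b” ends up one pod short simply because it was evaluated second. Nothing in either threshold says which service matters more.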

So, What Does KaaS Solve For?

KaaS offerings speed up your adoption of the Kubernetes platform. They install and configure the platform for you. They provide the scripts that make it easier to create and configure infrastructure: virtual machines or cloud instances, storage and network (VPN, subnet, access/security, etc.). They make it easier to interact with the platform through a high-level dashboard or portal. They make upgrading and security patching easier. Below is a matrix overview of a few of the main KaaS offerings.

| Key Capabilities | Amazon EKS | Azure AKS | Google GKE | Pivotal PKS |
| --- | --- | --- | --- | --- |
| Installs, configures k8s | ✅ | ✅ | ✅ | ✅ |
| Creates, configures infrastructure | ✅ | ✅ | ✅ | ✅ |
| High-level dashboards of clusters | ✅ | ✅ | ✅ | ✅ |
| Access to CLI, k8s dashboard | ✅ | ✅ | ✅ | ✅ |
| Node scaling: easy node addition | ✅ | ✅ | ✅ | ✅ |
| Node scaling: policies | Auto-scaling: threshold | Availability Set: threshold | Cluster Autoscaler: threshold | BOSH threshold (manual) |
| Easy upgrades | (1.10 only) | ✅ | ✅ | ✅ |
| Differentiated integrated services | ✅ | ✅ | ✅ | (GCP) |
| Pricing | $0.20/hr + EC2 costs | Free + Azure VM + Disk costs | Free + GCP VM + Storage costs | Free + GCP costs (only supports GCP, VMware) |

What you might notice from this matrix is that there isn’t much differentiation between KaaS offerings. Why? Infrastructure is increasingly commoditized. KaaS might be the modern infrastructure standard, but it, too, falls into the bucket of what needs to be abstracted away so that developers can focus on what matters: building applications that meet business requirements. Part of that is choosing the best services for their applications to meet those unique requirements, be they machine learning models, business analytics, IoT platforms, etc.

The competitive landscape will lie in these application services. When clouds compete on the services they offer, customers win. Would you rather choose a cloud because of existing contracts or skills? Or choose it because it offers the best IoT development platform for your business needs?

Here’s the rub: From the IaaS up, infrastructure is increasingly commoditized, but, as we’ve noted, you still have to manage it. You still have to navigate the trade-offs between performance, compliance and cost regardless of what type of cloud or KaaS you adopt. Just try to do that across multi-provider infrastructure at scale.

Think Responsibly. Do the Thinking Software Can’t.

If you know that your developers will be using services from multiple clouds (or maybe they already are), it’s important to consider KaaS providers in terms of a few things:

Interoperability

- Do services between clouds work together? Often applications are a composite of services in multiple clouds as well as on-prem.

- Do services within clouds work together? Some cloud providers are still playing catch-up here.

Portability

- Can applications move between providers based on business objectives (e.g., cost, latency)?

- Or is there technical/vendor lock-in (e.g., “data gravity”)?

Extensibility

- Does the provider offer APIs?

- Does the provider leverage upstream open source distributions?

That covers which platforms you choose to invest in. Software can’t make these decisions for you. But as you adopt these platforms, remember that these environments are increasingly complex due to the dynamic and distributed nature of cloud-native architectures. Navigating the continuous trade-offs between performance, cost and compliance is difficult. And, in 2018, it shouldn’t be done by people. Software can and should do it.

One Cloud

We are entering a world where the stack is increasingly abstracted away and commoditized. Clouds will compete on the services developers need for their applications and customers will refuse to be bound to a provider for the wrong reasons. In this world, multi-provider cloud infrastructure will essentially be one cloud to the developer. To achieve the end game—run my application when and where I want it, securely—organizations will need to build systems that think. If the system can think, then you simply define the SLO and business requirements, and it takes care of the rest. You free yourself and your teams to focus on more impactful work.