Trilio Brings Agentless Backup and Recovery to Kubernetes Applications

Trilio this week announced it is making TrilioVault for Kubernetes, a platform for protecting Kubernetes environments, available under an early access program.

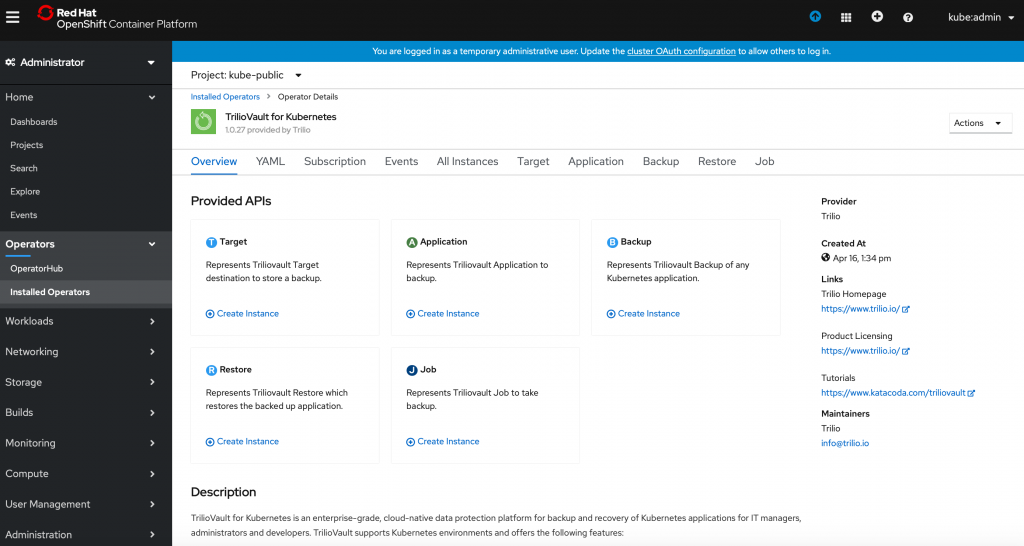

At the same time, Trilio announced TrilioVault for Kubernetes has been certified for Red Hat OpenShift, an application development and deployment platform based on Kubernetes.

Previewed at the recent KubeCon + CloudNativeCon 2019 conference, TrilioVault for Kubernetes is based on an agentless architecture that invokes application programming interfaces (APIs) to back up and recover an entire Kubernetes application, including data, metadata and any Kubernetes objects associated with the application. It also provides access to monitoring and logging capabilities via support for the open source Prometheus and Grafana tools.

Trilio CTO Murali Balcha notes that native support for Kubernetes allows IT teams to restore a complex microservices-based application to any point in time they choose, regardless of whether the Kubernetes cluster resides on-premises or in the cloud. Those applications may have been provisioned using Operators, Helm or labels within a distribution of Kubernetes. Supporting all of these is critical because every one of those elements is required to rehydrate a Kubernetes application, he says.

TrilioVault for Kubernetes is offered as a 30-day free trial or as a basic edition that is free of charge indefinitely for up to 10 nodes within a single cluster. The Enterprise Edition adds premium support and is priced per node under annual contracts.

Trilio already provides support for OpenStack environments as well as virtual machines from Red Hat. Balcha says Trilio envisions IT organizations eventually centralizing the management of backup and recovery across all three open source environments: OpenStack, Red Hat virtual machines and Kubernetes.

Data protection is becoming a more pressing issue in Kubernetes environments as the number of stateful applications deployed on the platform steadily increases. Initially, containers and Kubernetes clusters were employed to run stateless applications, which typically don't need to be backed up and recovered. In the age of DevOps, however, the ability to recover a Kubernetes application as quickly and completely as possible after a failure is now critical, given all the dependencies that exist between microservices. A well-constructed microservices application might not fail entirely, but there is certainly going to be a lot of pressure to make certain applications can be fully restored to their optimal level of performance as quickly as possible.

Less clear right now is who on the IT team will manage backup and recovery. In some organizations, as more responsibility for managing IT continues to shift left toward developers, responsibility for backup and recovery is moving away from IT storage administrators. In others, Kubernetes administrators are emerging to manage IT operations, including backup and recovery. Regardless of who is responsible for managing the process, application owners expect data residing on a Kubernetes cluster to be recoverable from any point in time. The real challenge, of course, is making sure all the data required to achieve that goal was backed up in the first place. After all, no matter the age of the platform, there's still no substitute for testing to be certain that any data that has been backed up is actually going to be available when it is needed most.