Red Hat Extends Reach of Container Storage Platform

Red Hat announced today it has updated its container storage platform to make it easier to access external data sources.

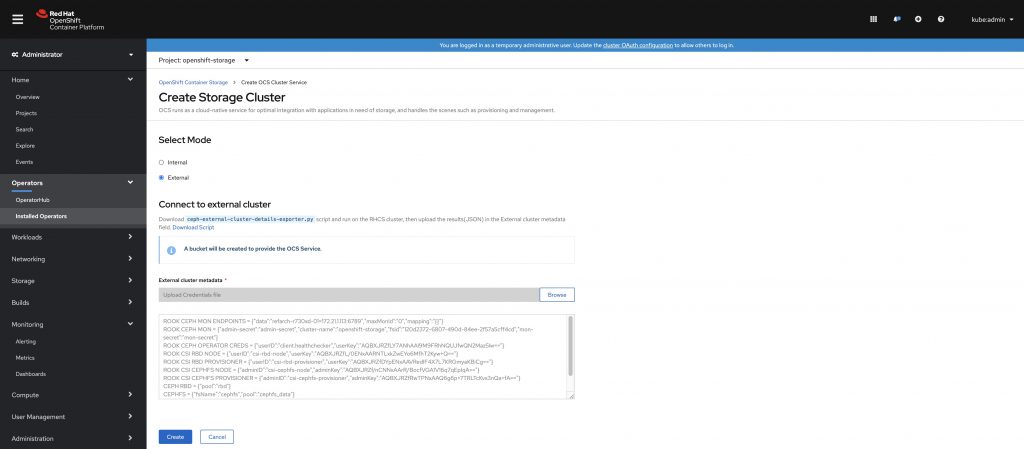

Pete Brey, marketing manager of hybrid cloud object storage at Red Hat, says version 4.5 of Red Hat Container Storage Platform can now give container applications access to external storage systems based on the open source Ceph software. With that extension, container applications that rely on the Red Hat Container Storage Platform can now access object storage systems alongside file and block storage systems.

At the same time, Red Hat is extending its support for Amazon S3 buckets by adding support for bucket notifications. The goal is to enable IT teams to create automated data pipelines that ingest, catalog and route data in real time.

Designed to provide access to persistent storage for both containerized applications and applications based on virtual machines that are deployed on the Red Hat OpenShift platform, the Red Hat Container Storage Platform enables IT teams to deploy stateful applications in a Kubernetes environment. Support for external storage systems gives an IT team the option of deploying stateless applications that store their data outside the Kubernetes cluster, Brey says.

Legacy applications deployed on Red Hat OpenShift can access storage via Red Hat OpenShift Virtualization, which employs the open source KubeVirt software to encapsulate virtual machines within containers. That approach allows both monolithic applications running in virtual machines and container-based microservices applications to access the same pool of shared storage resources.

Where to store data in a Kubernetes environment has become a hotly debated issue. Some IT teams prefer to store data outside of a Kubernetes cluster, which effectively means all container applications running on the cluster are stateless. Other teams want to centralize the management of storage along with the rest of the cluster by storing data on the cluster itself. Red Hat is staking out a more neutral position that lets IT teams pursue either approach based on individual application requirements, says Brey.

In effect, a hybrid approach to storage is now a requirement. IT organizations now routinely store data on cloud platforms, and that data needs to be accessible to applications as if it were stored locally. At the same time, latency concerns require applications to be able to access data stored on a local cluster. The goal is to make the right data available to applications at the right time, regardless of where that data is physically stored, Brey notes.

In the case of the Red Hat Container Storage Platform, IT teams can access massive data lakes stored on a public cloud as easily as they access data sitting on an array attached to a cluster. That cloud access capability will also soon be extended to IBM Cloud in the wake of IBM's acquisition of Red Hat, he adds.

It may be a while before the container storage debate is finally settled. However, the easier it is for applications to access data wherever it is located, the more flexible IT environments can be in an era where data is now highly distributed across an extended enterprise.