Dotscience Brings DevOps for AI Platform to Kubernetes

Dotscience today announced a DevOps platform for Kubernetes clusters that accelerates the building and deployment of artificial intelligence (AI) models.

Company CEO Luke Marsden says many organizations that are beginning to roll out AI models are embracing cloud-native platforms because those platforms make it easier to deploy the models in on-premises or public cloud environments. The AI models are deployed as Docker images running within a Kubernetes cluster.

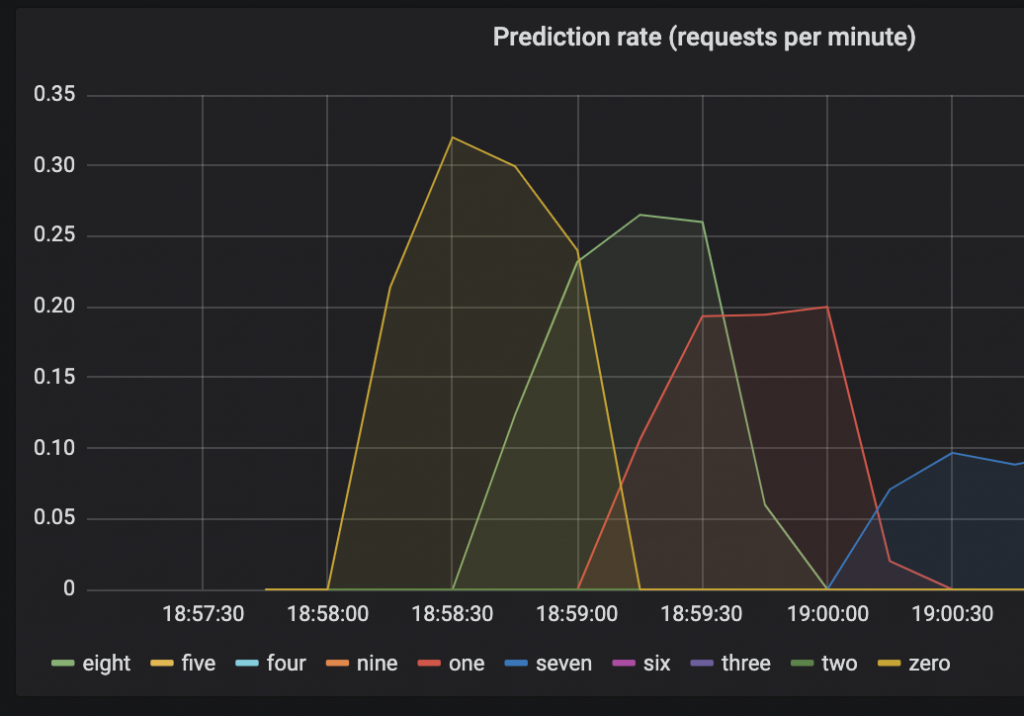

Dotscience is simplifying the process of training AI models and then deploying them on an inference engine through its Dotscience Kubernetes Runner platform. Those models can then be monitored continuously using an instance of Prometheus and Grafana-based dashboards that Dotscience is also making available.
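To make the monitoring step concrete, here is a minimal sketch of how a deployed model might expose prediction counts in the plain-text format that Prometheus scrapes. It is an illustration only and not Dotscience's implementation: the class name `PredictionMetrics`, the metric name `model_predictions_total` and the `label` label are all assumptions made for this example, and only the Python standard library is used.

```python
from collections import Counter


class PredictionMetrics:
    """Counts predictions per class and renders them as Prometheus text.

    Hypothetical helper for illustration; the names are not taken from
    any Dotscience or Prometheus client API.
    """

    def __init__(self, metric_name="model_predictions_total"):
        self.metric_name = metric_name
        self.counts = Counter()

    def record(self, predicted_label):
        # Call once per prediction served by the model.
        self.counts[predicted_label] += 1

    def render(self):
        # Prometheus text exposition: HELP and TYPE comments, then one
        # sample line per label value. Label values are assumed to need
        # no escaping (no quotes, backslashes or newlines).
        lines = [
            f"# HELP {self.metric_name} Predictions served, by predicted label.",
            f"# TYPE {self.metric_name} counter",
        ]
        for label, count in sorted(self.counts.items()):
            lines.append(f'{self.metric_name}{{label="{label}"}} {count}')
        return "\n".join(lines) + "\n"


metrics = PredictionMetrics()
for label in ["cat", "dog", "cat"]:
    metrics.record(label)
print(metrics.render())
```

Served from an HTTP endpoint, text like this could be scraped by Prometheus and charted in Grafana, where a sudden shift in the distribution of predicted labels would be visible on a dashboard.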

Dotscience also announced it has formed an alliance with S&P Global, under which the two companies will collaborate to define a set of best practices for building and deploying AI models. S&P Global chose to work with Dotscience after it discovered that at least half of AI models fail to get past the incubation phase due to issues such as the need to build robust data pipelines and instances of AI models that work on local laptops but fail to execute on servers running in a production environment.

Two of the biggest inhibitors of AI adoption are that most data science teams don’t have a set of repeatable processes in place for building and deploying AI models, and that there is no defined process for handing off an AI model to a team of application developers. As a result, many organizations today are awash in AI models that are never deployed with an application.

More challenging still, as new relevant data sources are discovered, many of those models need to be updated, which is difficult to accomplish in the absence of a set of best DevOps practices.

Finally, Marsden notes that many AI models have embedded biases that result in them being rolled back after deployment.

Marsden says that because of all these issues, DevOps for AI, also known as machine learning operations (MLOps), is emerging as a discipline among organizations on the leading edge of embracing AI. Dotscience is striving to make it easier to embrace DevOps for AI by providing a continuous integration/continuous deployment platform that manages the building and deployment process. AI models can be deployed via a graphical interface, a Python library or a set of command line interface (CLI) tools the company provides.

It’s obviously still early days as far as AI models are concerned. However, given the ambitious scope of many AI projects, organizations need to be able to fail fast should an AI model not live up to expectations. The challenge they face is not just in building AI models, but also in finding ways to deploy and update them at an industrial scale.