Equinix Metal Adds Cluster API to Tinkerbell Project

Equinix Metal today announced the addition of an application programming interface (API) for provisioning clusters on bare-metal servers to the Tinkerbell open source project. Tinkerbell is a bare-metal provisioning engine used to install operating systems along with other types of software.

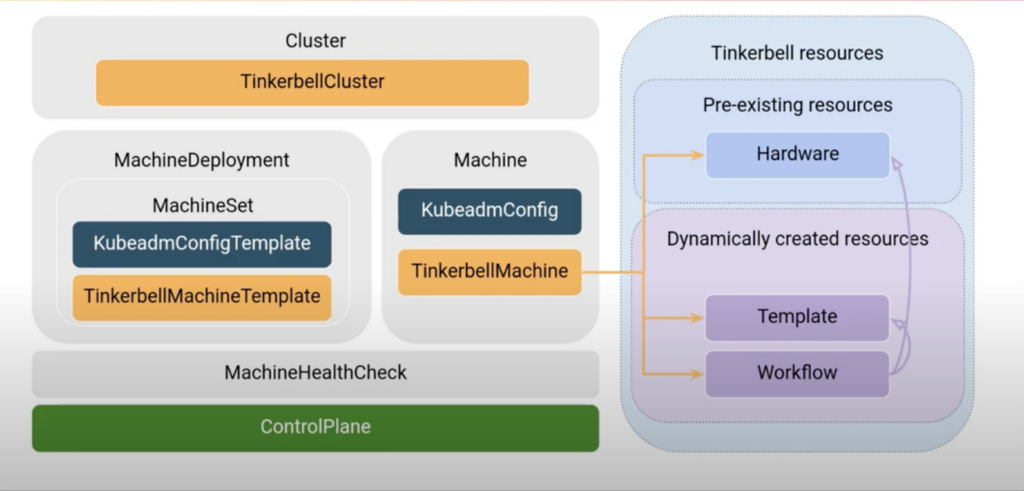

Jeremy Tanner, head of developer relations for Equinix Metal, says the Cluster API for Tinkerbell will enable an IT team to declaratively provision Kubernetes clusters and the underlying operating system directly on a bare-metal server.

In addition, support for Kubernetes types and controllers is being added so that Tinkerbell can interact with a Kubernetes cluster via the Kubernetes Resource Model. IT teams can then list Kubernetes resources, monitor resource changes or manage role-based access control (RBAC) settings directly from Tinkerbell. Previously, IT teams relied on a Postgres backend to manage those tasks. That approach is now slated for deprecation in a future release of Tinkerbell.

Tinkerbell was originally created by Packet, which was acquired by Equinix in 2020. Packet now operates as the Equinix Metal arm of Equinix, which provides hosting and connectivity services.

Rather than bolting a Cluster API on top of a generic bare-metal provisioning engine, Tinkerbell integrates the API directly, Tanner said. That approach will enable IT teams to, for example, use cloud-init to automatically spin up a server that can pull images for provisioning Kubernetes nodes from any container registry that complies with the Open Container Initiative (OCI) specification, he noted.

As a result, operating system images for provisioning clusters can be managed using the same process as container application images.

Tinkerbell is also adding support for Hook, a lighter-weight tool for provisioning servers, and is considering integrating PBNJ tools that would enable IT teams to, for example, remotely turn machines on and off.

Finally, the Tinkerbell community is replacing the versioning scheme for Tinkerbell components with semantic versioning. The change is meant to make it easier to understand which component versions are compatible with each other and to build sandbox environments using those components.

The number of bare-metal servers running Kubernetes is expected to rise steadily in the months ahead, especially as more servers are deployed at the network edge. Many organizations default to deploying Kubernetes on virtual machines simply because they lack the tools to deploy it any other way. As the tools for managing fleets of Kubernetes clusters mature, it's now apparent that IT teams can more easily deploy Kubernetes on either virtual machines or bare-metal servers, depending on the nature of the application workload.

Regardless of how Kubernetes clusters are deployed, the one thing that is certain is there will soon be a lot more of them running everywhere from the network edge to the cloud. The challenge IT teams now face is how best to manage, at scale, a highly distributed Kubernetes environment made up of virtual machines and a larger number of bare-metal servers, which are more challenging to provision.