What Are Containers and Why Do We Need Them?

Containers have been around since the early 2000s, and support for them was built into the Linux kernel around 2007. Because containers are small and portable, the same hardware can support far more containers than virtual machines, which reduces infrastructure costs and lets more applications be deployed faster. Usability issues kept containers from attracting much interest, however, until Docker came into the picture around 2013.

- Linux containers run applications in isolation from the host system they run on.

- Containers allow a developer to package up an application with all of the artifacts it needs, such as libraries and other dependencies, and ship it all out as one package.

- Containers provide a consistent experience, as developers and system administrators move code from development environments into production in a fast and replicable way.
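To make the isolation described above a little more concrete, here is a minimal sketch. It assumes a Linux host and relies on kernel namespaces, which the points above do not name but which are the kernel feature commonly used to give each container its own view of process IDs, network interfaces, mounts and so on. The function name `list_namespaces` is made up for this illustration.

```python
import os

NS_DIR = "/proc/self/ns"  # Linux-specific; assumed layout of the proc filesystem


def list_namespaces(ns_dir: str = NS_DIR) -> dict:
    """Return {namespace_type: identifier} for the current process.

    Returns an empty dict where the directory does not exist (non-Linux hosts).
    """
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]"
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable; skip it
    return namespaces


if __name__ == "__main__":
    for ns_type, ident in list_namespaces().items():
        print(f"{ns_type:10s} {ident}")
```

Running the script once on the host and once inside a container on that host will typically print different identifiers for entries such as `pid`, `net` and `mnt`, which is one way to see that the containerized process has its own isolated view of the system.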

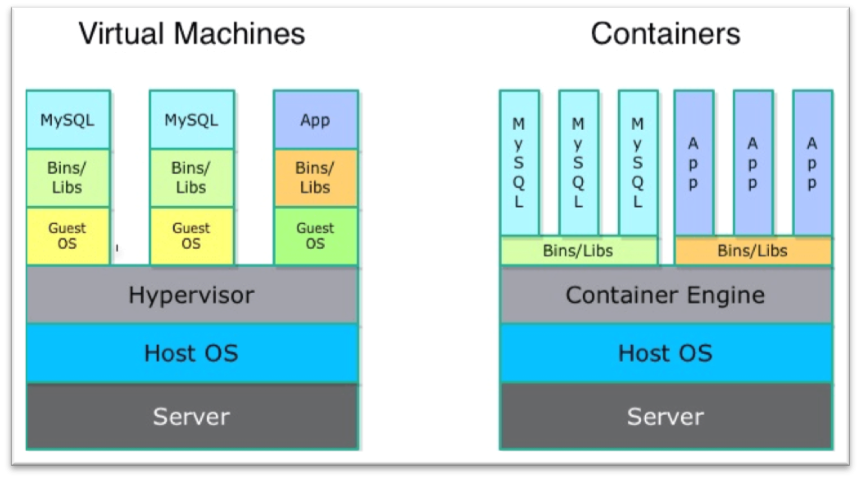

- Containers don’t need to replicate an entire operating system, only the individual components they need to operate. This reduces the size of the packaged application and improves performance: unlike traditional virtualization, the process runs essentially natively on its host.

- Containers have also sparked an interest in microservice architecture, a design pattern for developing applications in which complex applications are broken down into smaller, composable services that work together. Each component is developed separately and the application is then simply the sum of its constituent components. Each service can live inside of a container and can be scaled independently of the rest of the application, as the need arises.

- A bit about the Docker platform: it is a tool designed to make it easier to create, deploy and run applications by using containers. It is designed to benefit both developers and system administrators, making it a part of many DevOps toolchains. Developers can focus on writing code without worrying about the system it will run on, and can expect the application to run on any other Linux machine, regardless of customized settings that differ from the machine used for writing and testing the code. For operations staff, Docker gives flexibility and potentially reduces the number of systems needed because of its small footprint and lower overhead.

- Docker is different from standard virtualization; it is operating system-level virtualization. In hypervisor virtualization, virtual machines run on physical hardware via an intermediation layer (the hypervisor). Containers instead run in user space on top of the host operating system’s kernel, which makes them very lightweight and fast.

- Benefits of containers:

  - Isolating applications from one another and from the host operating system.

  - Providing near-native performance, because containerized processes run directly on the host kernel and resources are allocated in real time.

  - Controlling network interfaces and applying resource limits inside containers.

- Limitations of containers:

  - All containers run on the host system’s kernel; a container cannot run a different kernel.

  - Linux containers only support Linux “guest” operating systems.

  - A container is not a full virtualization stack like Xen, KVM or libvirt.

  - Security depends on the host system: because containers share the host kernel, a compromised host or a kernel vulnerability puts every container at risk.

- Risks of containers:

  - Container breakout: if a process escapes its container, it can gain unauthorized access to other containers, the host or even the wider data center, affecting every container on that host OS.

  - Public-facing applications hosted in containers can be hit by DDoS and cross-site scripting attacks.

  - Resource exhaustion: a container can be forced to use up system resources in an attempt to slow or crash other containers.

  - A compromised container may attempt to download additional malware, scan internal systems for weaknesses or sensitive data, or use insecure applications to flood the network, all of which can affect the other containers on the host.
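The resource-exhaustion risk listed above is usually countered by capping what each container may consume. The following is a minimal sketch of how a process might check whether such a cap applies to it. It assumes a Linux system using cgroup v2, where the memory limit is exposed as seen from inside a container at `/sys/fs/cgroup/memory.max`; that path and the helper names are assumptions for illustration, and the layout differs on cgroup v1 systems.

```python
from typing import Optional

# Assumed location of the cgroup v2 memory limit as seen from inside the group
CGROUP_MEMORY_MAX = "/sys/fs/cgroup/memory.max"


def parse_memory_max(text: str) -> Optional[int]:
    """Parse memory.max content: 'max' means unlimited, else a byte count."""
    value = text.strip()
    if value == "max":
        return None
    return int(value)


def read_memory_limit(path: str = CGROUP_MEMORY_MAX) -> Optional[int]:
    """Return the memory cap in bytes, or None if unlimited or unavailable."""
    try:
        with open(path) as f:
            return parse_memory_max(f.read())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    limit = read_memory_limit()
    if limit is None:
        print("no memory limit found")
    else:
        print(f"memory limit: {limit / 2**20:.0f} MiB")
```

Inside a container started with a memory cap (for example with Docker’s `--memory` option), the script would be expected to report that cap. Where it reports no limit, a runaway container is more likely to be able to starve its neighbours.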

Now we know what containers are and why we need them. Deploying many containers does require sophisticated management, though. Luckily, there is a solution that simplifies this: Kubernetes. In my next post, I’ll discuss what Kubernetes has to offer.