Introducing a ‘Security Mesh’ to Protect Kubernetes

The service mesh concept has been around for years and has had time to mature, so the technological foundation is now in place to extend its principles by introducing the notion of a “security mesh.”

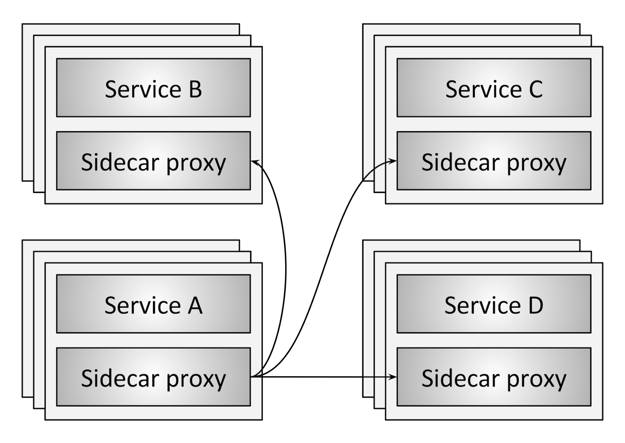

For the not-yet-initiated, a service mesh is a dedicated infrastructure layer that handles communications between services. The popularity of the service mesh concept has risen hand-in-hand with Kubernetes adoption, because Kubernetes pods provide a natural “sidecar” container structure for pairing services with service mesh functions.

At the same time, traditional security methods can no longer keep pace with the tools and processes used to deliver and deploy applications – let alone the scale and agility demands of modern apps themselves. The structure and principles behind a service mesh could serve the same purpose for security, in the form of a security mesh designed for cloud-native environments.

Let’s take a quick look at the design principles behind service mesh, and how they may apply to a similar solution purpose-built to deliver security.

At the heart of service mesh design is the end-to-end principle, which champions the benefits of deploying functions at the end nodes of a network, rather than at intermediary nodes such as routers or gateways. The reasoning is that reliability and security are far easier to implement at the endpoint than at some middle point that communications pass through. Therefore, functions such as load balancing, authentication and telemetry should be deployed as close to service applications’ origins as possible (even co-located, when feasible). Doing so by hand would mean duplicating and constantly updating software logic for every service, a significant hurdle in the absence of the right container orchestration capabilities. However, using Kubernetes to deploy a sidecar container in the same network namespace as each service application solves this issue rather neatly. Those sidecars can then be tasked with handling functions such as traffic management and security.
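To make the sidecar pattern concrete, here is a minimal sketch that adds a proxy container to a Pod manifest represented as a plain Python dictionary. The function name `inject_sidecar`, the image names and the port number are all hypothetical placeholders, not taken from any particular mesh product; real meshes typically perform this step automatically at deployment time.

```python
import copy
import json

# Hypothetical sidecar container spec; the image name and port are placeholders.
SIDECAR = {
    "name": "mesh-proxy",
    "image": "example/mesh-proxy:1.0",
    "ports": [{"containerPort": 15001}],
}


def inject_sidecar(pod: dict, sidecar: dict = SIDECAR) -> dict:
    """Return a copy of a Pod manifest with the sidecar container appended.

    Containers in the same pod share a network namespace, so the sidecar
    can reach the application over localhost.
    """
    patched = copy.deepcopy(pod)
    containers = patched["spec"]["containers"]
    # Skip injection if a container with the sidecar's name is already present.
    if not any(c["name"] == sidecar["name"] for c in containers):
        containers.append(copy.deepcopy(sidecar))
    return patched


# Hypothetical application pod used only for illustration.
app_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "orders"},
    "spec": {"containers": [{"name": "orders", "image": "example/orders:1.0"}]},
}

if __name__ == "__main__":
    print(json.dumps(inject_sidecar(app_pod), indent=2))
```

Because the function works on a copy and skips pods that already carry the sidecar, it can be applied repeatedly to the same manifest without side effects.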

Security solutions should also be deployed at endpoints in accordance with the end-to-end principle; this creates a security mesh that oversees and helps protect the service mesh. Applications running on Kubernetes (or another container platform) can then be monitored and safeguarded across their entire life cycles. When an application scales, the security mesh naturally scales with it, in step with the application pods. This security mesh also provides visibility into application behavior, which is tremendously advantageous from a security perspective for reasons I’ll dig into.

Most service mesh implementations also include another key design feature: separate modules for the control plane and the data plane. The former, for which enterprises can rely on solutions including Istio or Conduit, handles policy routing decisions, manages service-related data across the platform and provides data plane configuration. The data plane, which can be implemented with solutions including Envoy, NGINX or Linkerd, performs functions such as network traffic forwarding and management, health checks, enforcement of content-based routing rules and authentication. This design is reminiscent of software-defined networking.
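The split between the two planes can be sketched in a few lines. In this simplified, assumption-laden model, a `ControlPlane` object holds the desired routing table and pushes versioned copies to each `DataPlaneProxy`, which then makes per-request decisions locally. The class and method names are illustrative only and do not correspond to the API of Istio, Envoy or any other product.

```python
from dataclasses import dataclass, field


@dataclass
class DataPlaneProxy:
    """Forwards traffic using whatever routes the control plane last pushed."""
    name: str
    routes: dict = field(default_factory=dict)
    version: int = 0

    def apply_config(self, version: int, routes: dict) -> None:
        # Ignore stale pushes so an old config never overwrites a newer one.
        if version > self.version:
            self.version = version
            self.routes = dict(routes)

    def forward(self, service: str) -> str:
        # The per-request decision is local: no call to the control plane.
        return self.routes.get(service, "reject")


@dataclass
class ControlPlane:
    """Holds the desired routing policy and distributes it to every proxy."""
    proxies: list = field(default_factory=list)
    routes: dict = field(default_factory=dict)
    version: int = 0

    def register(self, proxy: DataPlaneProxy) -> None:
        self.proxies.append(proxy)
        proxy.apply_config(self.version, self.routes)

    def set_route(self, service: str, backend: str) -> None:
        self.routes[service] = backend
        self.version += 1
        for proxy in self.proxies:
            proxy.apply_config(self.version, self.routes)
```

The point of the design is that the control plane stays off the request path: if it becomes unavailable, proxies keep forwarding with the last configuration they received.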

A security mesh solution should adhere to these same design principles and differ from traditional firewalls and routers by using separate control and data planes as well. Within the security mesh, the security data plane should handle network traffic inspection, maintaining high throughput and performance while collecting network and system telemetry data. The security control plane interacts with the platform and data plane to deliver service discovery data, develop application behavior models and enforce security policies. At the same time, terminating the TCP connection at the data plane proxy exposes the application protocol, and that insight can be used to perform various L4-L7 functions. For instance, HTTP request URLs and headers can be used to route connections to whatever destination policies dictate.
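As an illustration of routing on HTTP request URLs and headers, the following sketch matches a request against an ordered rule list and returns the first destination whose path prefix and required headers both match. The `Rule` type, the rule table and the destination names are hypothetical, and a real proxy would support far richer matching.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    path_prefix: str
    destination: str
    # (name, value) pairs that must all be present; names are lowercase.
    headers: tuple = ()


# Hypothetical policy: more specific rules are listed first.
RULES = [
    Rule("/api/orders", "orders-canary", (("x-canary", "true"),)),
    Rule("/api/orders", "orders-v1"),
    Rule("/api/", "api-gateway"),
]


def pick_destination(path: str, headers: dict, rules=RULES) -> Optional[str]:
    """Return the destination of the first matching rule, or None."""
    # HTTP header names are case-insensitive, so normalize before comparing.
    normalized = {k.lower(): v for k, v in headers.items()}
    for rule in rules:
        if not path.startswith(rule.path_prefix):
            continue
        if all(normalized.get(name) == value for name, value in rule.headers):
            return rule.destination
    return None
```

A request for `/api/orders/42` carrying `X-Canary: true` would go to `orders-canary`, while the same path without that header would fall through to `orders-v1`.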

Putting these visibility and management facets together, a security mesh can build a detailed picture of the networking and application activities and behavior within the container environment, which it can use to identify anomalies and keep applications secure. Attackers can be barred from accessing network communications, thus limiting attack opportunities. Attempted breaches and exploits can also be recognized through the process activity, file access and system resource usage they produce. In this way, abnormal application behavior sends up a red flag that the security mesh can act on to thwart malicious activities, defeating exploit attempts at their earliest stages.
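One simple way to picture this kind of behavior-based detection is an allowlist learned from observation. The sketch below is an assumption about how such a model might work, not a description of any specific product: during a learning phase it records every event seen per service, and afterward it flags anything outside that baseline. The class name `BehaviorBaseline` and the event labels are hypothetical.

```python
from collections import defaultdict


class BehaviorBaseline:
    """Learns per-service behavior, then flags events outside the baseline."""

    def __init__(self) -> None:
        self._allowed = defaultdict(set)
        self.learning = True

    def observe(self, service: str, kind: str, detail: str) -> bool:
        """Record or check one event; return True if it is anomalous."""
        event = (kind, detail)
        if self.learning:
            # While learning, every observed event becomes part of the baseline.
            self._allowed[service].add(event)
            return False
        # After learning, anything not previously seen is treated as anomalous.
        return event not in self._allowed.get(service, set())


if __name__ == "__main__":
    baseline = BehaviorBaseline()
    baseline.observe("orders", "process", "python")
    baseline.observe("orders", "connect", "db:5432")
    baseline.learning = False
    # A shell spawning inside the orders service was never seen while learning.
    print(baseline.observe("orders", "process", "/bin/sh"))
```

An exact-match baseline like this is deliberately strict; a practical system would need a way to update the model as applications legitimately change.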